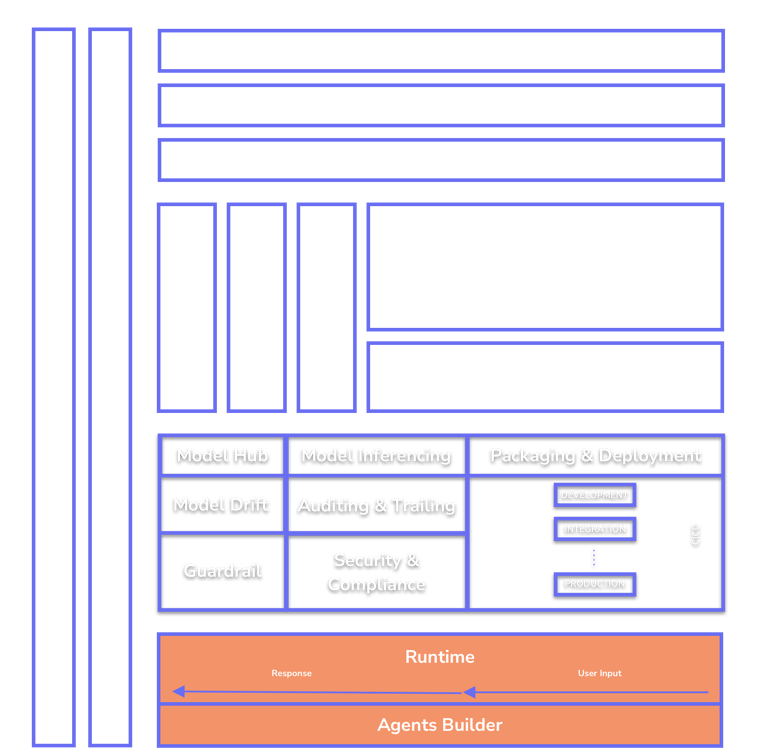

Empower Your LLM Pipeline

Streamline your AI model lifecycle from data to deployment with our private LLM platform.

Runtime Playground

When an LLM is deployed and running in production, the deployment exposes its own API, which other applications can integrate with directly. To make that API easier to work with, we offer a playground portal where users can test and interact with it in real time. In the portal, users can adjust runtime settings such as response length, temperature, and token limits on the fly, start live chat sessions to observe the API's responses, and use what they see to tune settings and choose an integration approach. The API can also be paired with our Agent Builder portal to customize LLM-driven workflows.
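As a rough illustration of the kind of experiment the playground supports, the sketch below builds a chat request from a conversation history and a set of runtime settings. It is not the platform's actual API: `RuntimeConfig`, `build_chat_request`, the payload field names, and the 0.0 to 2.0 temperature range are all assumptions made for this example.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class RuntimeConfig:
    """Hypothetical runtime settings; field names are assumptions."""
    temperature: float = 0.7
    max_tokens: int = 256

    def __post_init__(self):
        # Assumed valid ranges; a real deployment may differ.
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0.0 and 2.0")
        if self.max_tokens <= 0:
            raise ValueError("max_tokens must be positive")


def build_chat_request(history, user_message, config):
    """Return a request payload without mutating the caller's history."""
    messages = list(history) + [{"role": "user", "content": user_message}]
    return {"messages": messages, **asdict(config)}


# Same question, two different settings, as one might try in a live session.
history = []
question = "Summarize our returns policy."
precise = build_chat_request(history, question, RuntimeConfig(temperature=0.1, max_tokens=128))
creative = build_chat_request(history, question, RuntimeConfig(temperature=1.0, max_tokens=512))
```

Keeping the settings in a separate, validated object makes it easy to send the same conversation with different configurations and compare the responses side by side.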

Agent Builder

The Agent Builder is designed to accelerate the deployment of LLM-based solutions and integrate them into day-to-day operations. It works best when users define clear task objectives, especially where model-driven decisions must be made at scale. Our platform lets users build dynamic workflows from established patterns: prompt chaining, routing, parallelization, orchestrator-workers, and evaluator-optimizer. For open-ended problems that need an unpredictable number of iterations, where a fixed execution path cannot be defined in advance, it also supports agent-based approaches. With a drag-and-drop interface, users can connect the LLM runtime API to other tools and define custom rules and conditions for each workflow, keeping it adaptable and automated without complex coding.
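To make two of these patterns concrete, here is a minimal sketch of prompt chaining and routing. It does not reflect how the Agent Builder is implemented: the model is stood in for by any function that maps a prompt string to a response string, and `run_chain`, `route`, and the stub models are hypothetical names introduced for this example.

```python
from typing import Callable, Dict, List

# Any callable that takes a prompt and returns text (assumed stand-in for the runtime API).
LLM = Callable[[str], str]


def run_chain(llm: LLM, templates: List[str], text: str) -> str:
    """Prompt chaining: each step's output becomes the next step's input."""
    result = text
    for template in templates:
        result = llm(template.format(input=result))
    return result


def route(llm: LLM, classify_template: str,
          handlers: Dict[str, Callable[[str], str]],
          text: str, default: str) -> str:
    """Routing: ask the model for a label, then dispatch to the matching handler."""
    label = llm(classify_template.format(input=text)).strip().lower()
    handler = handlers.get(label, handlers[default])
    return handler(text)


# Stub models so the sketch runs without a deployed LLM.
def echo_llm(prompt: str) -> str:
    return f"[{prompt}]"


def billing_classifier(prompt: str) -> str:
    return " Billing\n"


chained = run_chain(echo_llm, ["Extract facts: {input}", "Summarize: {input}"], "raw ticket")
handlers = {
    "billing": lambda t: "billing-team:" + t,
    "general": lambda t: "general-team:" + t,
}
routed = route(billing_classifier, "Classify: {input}", handlers, "refund me", default="general")
```

In a real workflow the stub functions would be replaced by calls to the deployed model, and an unrecognized label falls back to the `default` handler rather than failing.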

AI catalysts are essential for every data-driven company

Unlock the full potential of shipping secure AI products

Driving innovation with AI for global business excellence

© 2026. All rights reserved.

LLMOPS Platform