Accelerate the Delivery of DevOps / LLMOps with a Multi-Agent Platform

Streamline DevOps and generative AI model lifecycles across your hybrid architecture with a network of intelligent AI agents purpose-built to eliminate manual overhead.

Empower your teams to monitor, auto-heal, and optimize systems effortlessly, delivering high availability and exceptional resilience at every layer.

Trusted by the world’s leading organizations

We empower enterprises to scale efficiently through AI-driven automation across the full Agentic DevOps pipeline.

High Availability

AI agents ensure high availability through capacity planning, fault tolerance, and disaster recovery readiness—minimizing outages and keeping business-critical services resilient and reliable.

Simply install our solution, enforce best practices effortlessly across environments, and see how AI-powered automation maximizes your ROI.

Cost Efficiency

AI automation reduces manual work, optimizes resources, and lowers downtime costs. It helps cut infrastructure waste, speed up recovery, and reduce DevOps team burnout, improving efficiency and ROI.

Faster Delivery

AI agents automate pipelines, infrastructure, and incident handling to speed up release cycles and eliminate bottlenecks—accelerating delivery, boosting agility, and reducing time-to-market.

Automated Compliance

Enforce security, governance, and best practices automatically across environments. AI ensures every deployment meets compliance requirements and standards with zero manual overhead.

Smart Incident Response

AI agents detect issues early, trigger automated alerts, and self-heal systems to reduce mean time to recovery (MTTR). Faster incident resolution improves system reliability and reduces operational stress.
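MTTR is commonly computed as total recovery time divided by the number of incidents. The minimal sketch below assumes each incident is recorded as a hypothetical `(detected, resolved)` timestamp pair; teams differ on whether the clock starts at outage onset or at detection, so treat that starting point as an assumption.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

def mttr(incidents: List[Tuple[datetime, datetime]]) -> timedelta:
    """Mean time to recovery: average of (resolved - detected) across incidents."""
    if not incidents:
        raise ValueError("MTTR is undefined for zero incidents")
    # Sum the per-incident recovery durations, starting from a zero timedelta.
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)

# Two hypothetical incidents: one recovered in 30 minutes, one in 90 minutes.
incidents = [
    (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 10, 30)),
    (datetime(2024, 1, 2, 14, 0), datetime(2024, 1, 2, 15, 30)),
]
print(mttr(incidents))  # 1:00:00
```

Automated detection and self-healing shrink the `resolved - detected` term for each incident, which is what lowers the average.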

Better Collaboration

AI-powered reports and virtual assistants improve communication and visibility across Engineering, Product Management, Business, and Customer Support teams, enabling faster, better-informed decision-making.

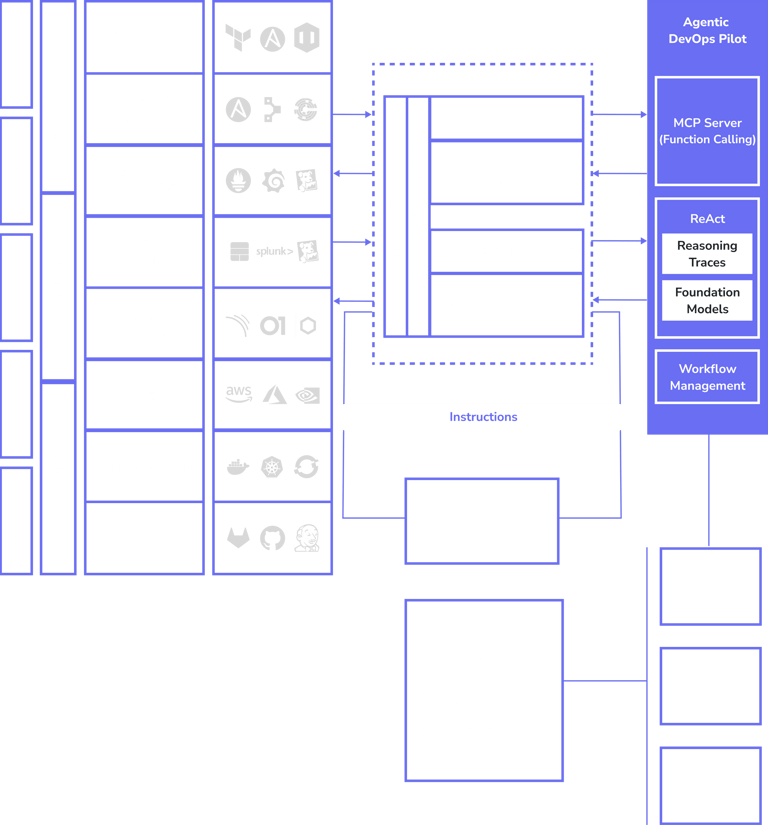

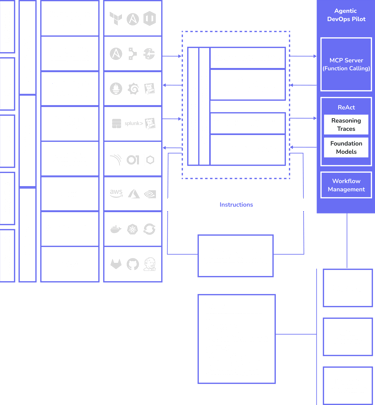

End-to-End DevOps Large Action Model

DevOps is the foundation of modern software delivery, automating infrastructure, deployments, and operations to ensure system reliability and security. Yet DevOps teams are under pressure from high-availability challenges, rising costs, slow pipelines, and growing incident volumes. The stakes are high: downtime costs up to $300K per hour, 70% of outages stem from human error, and cloud waste reaches billions of dollars annually. Although the market is projected to grow to $81B by 2033, DevOps teams are overwhelmed by complexity, inefficiency, and burnout, making automation and intelligent solutions more critical than ever.

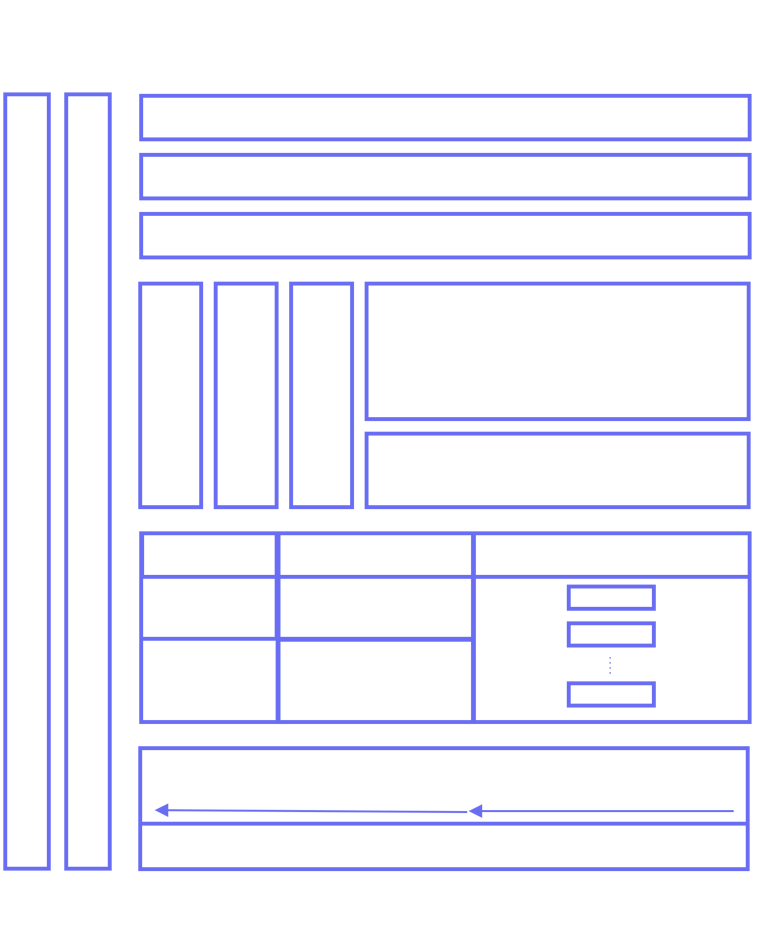

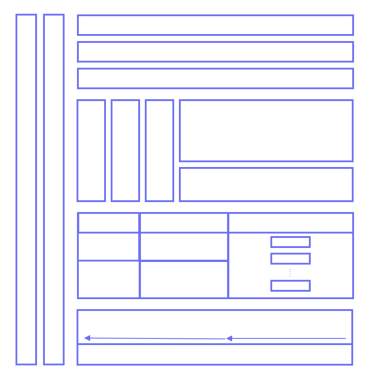

DevOps teams today face increasing complexity, rising costs, and constant pressure to deliver faster while maintaining high availability and security. We empower these teams with intelligent AI agents that automate, optimize, and secure the entire DevOps lifecycle, reducing downtime, accelerating delivery, and improving efficiency. Our AI-driven autonomous DevOps Optimization Platform uses Large Action Models (LAMs) and hybrid agent techniques such as ReAct, Plan & Execute, ReWOO, RAISE, and Reflexion to automate tasks across the infrastructure, CI/CD, observability, and security layers. It enforces best practices, integrates human-in-the-loop validation, and continuously self-improves using reinforcement learning and customizable prompt templates.

Complementing this, our AI-driven HA & Incident Management Platform provides self-healing, proactive monitoring, and intelligent incident handling. With stateful graphs, persistent learning, and pattern recognition, the system enables preemptive fixes and reduces failure rates, helping enterprises achieve scalable, resilient, and highly automated DevOps operations.
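As a rough illustration of the ReAct pattern named above (interleaving a reasoning step, a tool call, and an observation until the agent can answer), here is a minimal Python sketch. The tools `check_service` and `restart_service`, the in-memory `SERVICES` state, and the rule-based `scripted_policy` that stands in for the model are all hypothetical; a real agent would prompt an LLM to produce each thought and action.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical in-memory service state and tools; not a real platform API.
SERVICES: Dict[str, str] = {"checkout": "unhealthy", "search": "healthy"}

def check_service(name: str) -> str:
    return SERVICES.get(name, "unknown")

def restart_service(name: str) -> str:
    SERVICES[name] = "healthy"  # pretend the restart fixes the fault
    return f"restarted {name}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "check_service": check_service,
    "restart_service": restart_service,
}

@dataclass
class Step:
    thought: str           # the agent's reasoning for this turn
    action: Optional[str]  # tool to call, or None for a final answer
    arg: str               # tool argument, or the final answer text

History = List[Tuple[Step, str]]  # (step taken, observation returned)

def scripted_policy(goal: str, history: History) -> Step:
    """Rule-based stand-in for the LLM that would normally choose the next step."""
    if not history:
        return Step("Check the service first.", "check_service", goal)
    last_step, observation = history[-1]
    if last_step.action == "check_service" and observation == "unhealthy":
        return Step("It is unhealthy, so restart it.", "restart_service", goal)
    if last_step.action == "restart_service":
        return Step("Verify that the restart worked.", "check_service", goal)
    return Step(f"{goal} is {observation}; stop.", None, observation)

def react_loop(goal: str, policy: Callable[[str, History], Step] = scripted_policy,
               max_steps: int = 5) -> Tuple[str, History]:
    """Thought -> Action -> Observation until the policy returns a final answer."""
    history: History = []
    for _ in range(max_steps):
        step = policy(goal, history)
        if step.action is None:
            return step.arg, history
        observation = TOOLS[step.action](step.arg)
        history.append((step, observation))
    return "step budget exhausted", history

answer, trace = react_loop("checkout")
for step, obs in trace:
    print(f"{step.thought} -> {step.action}({step.arg}) -> {obs}")
print("final:", answer)
```

Here the agent checks the service, restarts it, re-checks it, and then answers `healthy`. The `max_steps` cap is a simple safeguard against an agent that never converges; a human-in-the-loop approval could be added before any mutating tool such as `restart_service` runs.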

Purpose-Built to Empower AI Teams at Every Stage of LLMOps

AI Orchestration

We automate resource, data, and infrastructure management, optimizing CPU, GPU, and storage while providing real-time alerts for efficient AI workflows. Our data governance and transformation tools enable seamless API, stream, and batch serving. With hybrid cloud-on-prem automation powered by Kubernetes, we ensure scalable and efficient AI pipelines.

AI Flow Automation

We streamline the LLM lifecycle from model building to deployment with automation and real-time insights. Our LLM Factory enables fine-tuning, prompt engineering, and cost-efficient training, while the Benchmark Center tracks 40+ key metrics. With DAG-based pipelines, continuous feedback loops, and real-time monitoring, we make AI operations faster, more efficient, and scalable.

AI Post Training

We streamline model packaging, deployment, and monitoring with Kubernetes-based orchestration and CI/CD pipelines for high availability. Our observability tools track latency, accuracy, and model drift to keep performance optimal. We automate auditing, compliance checks, and security enforcement, integrating responsible-AI guardrails to prevent bias, harmful content, and unauthorized access.
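Model drift can be detected in several ways. One widely used statistic is the Population Stability Index (PSI), which compares how a feature or model score is distributed in a reference window versus live traffic: the sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the reference and live bin shares. The pure-Python sketch below uses equal-width bins and the common rule-of-thumb thresholds of 0.1 and 0.25; the binning scheme, thresholds, and sample data are assumptions for illustration, not the platform's documented method.

```python
import math
from typing import List, Sequence

def _proportions(values: Sequence[float], lo: float, width: float,
                 bins: int, eps: float) -> List[float]:
    """Share of values per equal-width bin, floored at eps so log() never sees zero."""
    counts = [0] * bins
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
        counts[idx] += 1
    return [max(c / len(values), eps) for c in counts]

def psi(reference: Sequence[float], live: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    lo = min(min(reference), min(live))
    hi = max(max(reference), max(live))
    width = (hi - lo) / bins or 1.0  # guard against all-identical values
    e = _proportions(reference, lo, width, bins, eps)
    a = _proportions(live, lo, width, bins, eps)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_level(score: float) -> str:
    """Rule-of-thumb interpretation; thresholds should be tuned per model."""
    if score < 0.1:
        return "none"
    if score < 0.25:
        return "moderate"
    return "significant"

reference = [i / 100 for i in range(100)]     # hypothetical baseline scores
live = [0.5 + i / 200 for i in range(100)]    # hypothetical shifted live scores
print(drift_level(psi(reference, reference)))  # none
print(drift_level(psi(reference, live)))       # significant
```

Production setups often bin by reference quantiles instead of equal widths so that each bin starts with a similar share of data.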

Governance

Our Model Tracker streamlines model governance by managing versioning, approvals, and documentation in a unified platform, ensuring full lifecycle visibility for developers, product managers, and stakeholders. Our Risk & Vulnerability Manager continuously monitors security threats, including data breaches, model poisoning, and API risks, providing proactive risk mitigation to ensure safe and compliant AI deployment.

Cost Management

We provide real-time cost monitoring, predictive analytics, and rightsizing automation to help enterprises optimize AI expenditures. Our Budget Management Tool tracks GPU and resource usage across hybrid environments, offering insights to reduce CAPEX and improve cost efficiency. The Rightsizing Portal identifies inefficiencies, optimizes workloads, and secures the best pricing to maximize AI cost-effectiveness.

Runtime and Agents Builder

Our Runtime Playground provides a real-time API testing environment, allowing users to adjust runtime configurations like response length and temperature, fine-tune performance, and integrate LLMs seamlessly. The Agent Builder enables users to create dynamic workflows using prompt chaining, routing, and orchestration patterns, offering drag-and-drop automation for scalable AI-driven operations.
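Prompt chaining and routing, as named in the Agent Builder description, can be sketched in a few lines of Python: a router prompt classifies the request, and the chosen route runs a fixed sequence of prompt templates in which each step's output feeds the next. `fake_llm`, the route names, and the templates are hypothetical placeholders so the example runs offline; in practice each call would go to a model endpoint configured with runtime settings such as temperature and maximum response length.

```python
from typing import Callable, Dict, List, Tuple

LLM = Callable[[str], str]

def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a model call so the sketch runs without network access."""
    if prompt.startswith("Classify:"):
        return "incident" if "error" in prompt.lower() else "question"
    if prompt.startswith("Summarize:"):
        return "summary: " + prompt[len("Summarize:"):].strip()
    if prompt.startswith("Draft runbook for:"):
        return "runbook for " + prompt[len("Draft runbook for:"):].strip()
    return "answer: " + prompt

# Each route is a chain of prompt templates; {input} receives the previous step's output.
ROUTES: Dict[str, List[str]] = {
    "incident": ["Summarize: {input}", "Draft runbook for: {input}"],
    "question": ["{input}"],
}

def run_chain(llm: LLM, templates: List[str], text: str) -> str:
    """Prompt chaining: feed each step's output into the next template."""
    for template in templates:
        text = llm(template.format(input=text))
    return text

def route_and_run(llm: LLM, user_input: str) -> Tuple[str, str]:
    """Routing: classify first, then run the matching chain (default route: 'question')."""
    label = llm(f"Classify: {user_input}").strip()
    templates = ROUTES.get(label, ROUTES["question"])
    return label, run_chain(llm, templates, user_input)

print(route_and_run(fake_llm, "Error 502 on checkout API"))
# ('incident', 'runbook for summary: Error 502 on checkout API')
print(route_and_run(fake_llm, "How do I rotate API keys?"))
# ('question', 'answer: How do I rotate API keys?')
```

Keeping routes as plain data (`ROUTES`) is one way a visual builder could map drag-and-drop blocks onto executable chains, though the platform's actual representation is not described here.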

LLMOps Platform

LLM adoption has been widespread across data-driven organizations of all sizes. The demand for pre-trained models is high, with some organizations training their own models if the budget allows. Privacy, ownership, and SLAs are major concerns for companies, who prefer an architecture-agnostic approach with the flexibility to lift and shift as needed.

Our solution is a multi-faceted LLMOps platform that helps developers acquire and prepare data, adapt models through several approaches (RAG, prompt engineering, fine-tuning, and full training), evaluate performance, automate model inference, and integrate the LLM solution into products through systematic CI/CD.

Our platform accelerates this process by 270% at one-tenth of the cost. Below, we cover the main building blocks of our solution.

We empower enterprises to unlock their full AI potential with our automated LLMOps platform.

Data Privacy

Developers have the freedom to choose where to train and deploy LLMs. Our platform is environment-agnostic and adheres to the highest security standards.

Automation

Our no-code LLMOps platform automates the entire AI lifecycle, from data ingestion and training to inference and deployment, streamlining your journey to powerful AI solutions.

Simply install our solution, apply it to your own data in any environment, and watch your AI capabilities soar effortlessly.

Cost

With our LLMOps platform, customers optimize costs through flexible resource utilization, leveraging infrastructure and models designed to minimize GPU usage without compromising performance.

Governance

Our LLMOps platform includes a centralized governance hub, empowering product owners to seamlessly track model development, ensure compliance with auditing standards, and monitor performance for continuous improvement.

Resiliency

Our LLMOps platform incorporates DevOps best practices to simplify integration, minimize latency, and ensure high availability for your deployed models, delivering reliable and seamless performance.

Control

AI engineers can effortlessly benchmark models for accuracy while mitigating LLM issues such as hallucinations, restricted topics, and harmful content, ensuring reliable and responsible AI performance.

Accelerate the Delivery of LLM-Based Products

Streamline the generative AI model lifecycle with our in-house LLMOps platform, designed for tailored, no-code solutions.

Empower your business to build, customize, and deploy powerful LLMs effortlessly, with complete control and unmatched efficiency.

Intelligent DevOps: The Future of Engineering with AI Agents

Accelerate delivery, boost resilience, and build with confidence